Published on May 10, 2024

April 18, 2024

Speaker: Nathan Lambert, Allen Institute for AI (AI2)

Aligning Open Language Models

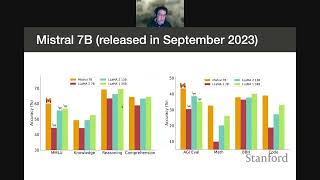

Since the emergence of ChatGPT, there has been an explosion of methods and models attempting to make open language models easier to use. This talk retells the major chapters in the evolution of open chat, instruct, and aligned models, covering the most important techniques, datasets, and models, including Alpaca, QLoRA, DPO, PPO, and everything in between. It concludes with predictions and expectations for the future of aligning open language models. Slides are posted here: https://docs.google.com/presentation/...

All the models in the figures are in this HuggingFace collection: https://huggingface.co/collections/na...

About the speaker:

Nathan Lambert is a Research Scientist at the Allen Institute for AI focusing on RLHF and the author of Interconnects.ai. Previously, he helped build an RLHF research team at HuggingFace. He received his PhD from the University of California, Berkeley, working at the intersection of machine learning and robotics. He was advised by Professor Kristofer Pister in the Berkeley Autonomous Microsystems Lab and by Roberto Calandra at Meta AI Research.

More about the course can be found here: https://web.stanford.edu/class/cs25/

View the entire CS25 Transformers United playlist: Stanford CS25 - Transformers United