Published On Dec 14, 2023

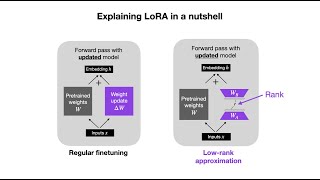

In this video, I dive into how LoRA works compared with full-parameter fine-tuning, explain why QLoRA is a step up, and take an in-depth look at the LoRA-specific hyperparameters: Rank, Alpha, and Dropout.

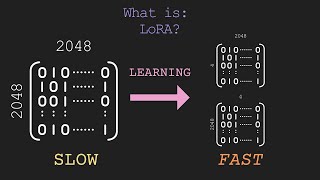

0:26 - Why We Need Parameter-efficient Fine-tuning

1:32 - Full-parameter Fine-tuning

2:19 - LoRA Explanation

6:29 - What Should Rank Be?

8:04 - QLoRA and Rank Continued

11:17 - Alpha Hyperparameter

13:20 - Dropout Hyperparameter

Ready to put it into practice? Try LoRA fine-tuning at www.entrypointai.com
