Published On Dec 19, 2023

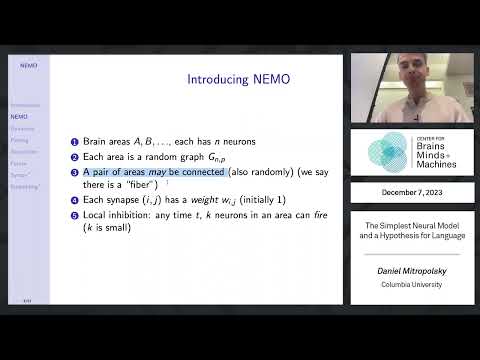

Daniel Mitropolsky, Columbia University

Abstract: How do neurons, in their collective action, beget cognition, as well as intelligence and reasoning? As Richard Axel recently put it, we do not have a logic for the transformation of neural activity into thought and action; discerning this logic is the most important future direction of neuroscience. I will present a mathematical neural model of brain computation called NEMO, whose key ingredients are spiking neurons, random synapses and weights, local inhibition, and Hebbian plasticity (no backpropagation). Concepts are represented by interconnected co-firing assemblies of neurons that emerge organically from the dynamical system defined by the model's equations. It turns out to be possible to carry out complex operations on these concept representations, such as copying, merging, completion from small subsets, and sequence memorization. NEMO is a neuromorphic computational system that, because of its simplifying assumptions, can be efficiently simulated on modern hardware. I will present how to use NEMO to implement an efficient parser of a small but non-trivial subset of English, and a more recent model of the language organ in the baby brain that learns the meaning of words, and basic syntax, from whole sentences with grounded input. In addition to constituting hypotheses as to the logic of the brain, we will discuss how principles from these brain-like models might be used to improve AI, which, despite astounding recent progress, still lags behind humans in several key dimensions such as creativity, hard constraints, and energy consumption.
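
To make the ingredients named in the abstract concrete, here is a minimal sketch of how an assembly might form when a fixed stimulus repeatedly fires into one brain area. It is an illustration under stated assumptions, not the speaker's implementation: the function names (`k_cap`, `random_weights`, `project`), the parameters (`n`, `k`, `p`, `beta`, `rounds`), the multiplicative Hebbian update, and the modeling of local inhibition as "only the `k` most excited neurons fire" are all choices made here for illustration.

```python
import random

def k_cap(inputs, k):
    """Local inhibition (assumed form): only the k most excited neurons fire."""
    order = sorted(range(len(inputs)), key=lambda i: (-inputs[i], i))
    return set(order[:k])

def random_weights(n_pre, n_post, p, rng):
    """Random synapses: weight 1.0 with probability p, otherwise 0.0 (no synapse)."""
    return [[1.0 if rng.random() < p else 0.0 for _ in range(n_post)]
            for _ in range(n_pre)]

def project(n=200, k=20, p=0.1, beta=0.1, rounds=10, seed=0):
    """Fire a fixed k-neuron stimulus into an area of n neurons for several rounds.

    Returns the set of firing neurons in the area after each round.
    """
    rng = random.Random(seed)
    stim_w = random_weights(k, n, p, rng)  # stimulus -> area synapses
    rec_w = random_weights(n, n, p, rng)   # recurrent area -> area synapses
    firing = set()                         # nothing in the area fires initially
    history = []
    for _ in range(rounds):
        # Total synaptic input from the stimulus and last round's firing neurons.
        inputs = [0.0] * n
        for s in range(k):
            for j in range(n):
                inputs[j] += stim_w[s][j]
        for i in firing:
            for j in range(n):
                inputs[j] += rec_w[i][j]
        winners = k_cap(inputs, k)
        # Hebbian plasticity (assumed multiplicative): strengthen existing
        # synapses from neurons that just fired into the new winners.
        # Absent synapses have weight 0.0 and therefore stay absent.
        for j in winners:
            for s in range(k):
                stim_w[s][j] *= 1.0 + beta
            for i in firing:
                rec_w[i][j] *= 1.0 + beta
        history.append(winners)
        firing = winners
    return history

if __name__ == "__main__":
    hist = project()
    for t in range(1, len(hist)):
        # Overlap between consecutive winner sets; a stable assembly would
        # show this approaching k.
        print(t, len(hist[t] & hist[t - 1]))
```

Running the script prints how many neurons two consecutive rounds have in common. Under these assumptions, a growing overlap is what one would read as an assembly emerging from the dynamics; the exact numbers depend on the seed and parameters chosen here.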

https://cbmm.mit.edu/news-events/even...